Lately I have been working in a position oriented mostly towards system administration. As a result, I have been creating tools that make a developer's everyday life easier.

Unfortunately, because that company has a legacy product (all of them do, even startups!), I had to provide some tooling for that too. As you may guess, that product was running on Windows servers. And here's where the story starts getting interesting.

It is a Microsoft product!

From: The dev community

Yes, yes! I know. Half of the people you ask will come back at you with that phrase. It isn't open source, and it is a Microsoft product. And when they utter that phrase you can see it in their facial expression: they say it with such aversion, as if Microsoft were the devil himself and they were the twelve apostles!

Sure, that product has its issues, but it also has some good (if not very good, in my humble opinion) documentation online: https://docs.microsoft.com/en-us/powershell/

Really powerful stuff, coming in from Microsoft, and the chaos that is called Windows OS… (let’s not forget Vista, Windows Millennium, Internet Explorer, and all those “successful products” we were forced to use…).

To cut to the chase

My main point is that PowerShell strives to offer the tools system administrators need to administer their Windows installations. And it fails, unfortunately. As a product it is so big and chaotic, with so many different pathways you can get caught in, especially when you compare it with the simplicity of its Unix counterpart. And that is even though they have tried to be more effective and direct. I mean, in every modern installation of Windows 10 all you have to do is press WinKey, type “Power” and press Enter, and you are in a CLI where you can start executing commands. Quite fast, and user friendly.

The problems start when you try to consolidate stuff. When you want to write different scripts that perform different tasks. When you are trying to include that awesome script you wrote, and it's essential to the grand scheme of your process. That's when things start to get interesting, and frankly, I think Microsoft hadn't really put things into perspective when they started implementing that product.

For example:

The security team asked me to lock down user permissions on a given server. The best way to do that (since we want our users to have only the permissions they actually need) is to create another role (or user) and assume that role to run stuff. Since the setup was old, the only option I had was to use a user for that, which led me to the following hidden default decisions made by PowerShell.

I had to use this:

$username = "domainuser_name"

$securePassword = "secure_hash" | ConvertTo-SecureString

$credential = New-Object System.Management.Automation.PSCredential $username, $securePassword

That let me assume the user and run the commands I wanted. The only problem was that I somehow had to produce the encrypted secure_hash first, using the ConvertFrom-SecureString function.

If you visit the documentation, skip over the description without reading it carefully (especially the last part of it) and jump straight to the usage, you will try to call it somehow like this:

$SecureString = Read-Host -AsSecureString

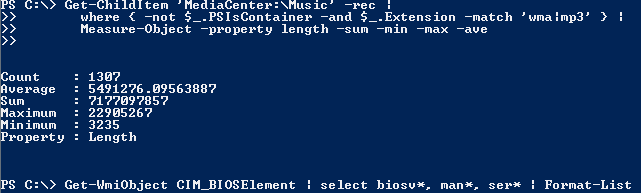

$StandardString = ConvertFrom-SecureString $SecureString

The above will echo something like this:

Write-Host $StandardString

70006f007700650072007300680065006c006c0072006f0063006b0073003f00

That is the output for the password: powershellrocks?

Now if you take that $StandardString and pass it to the ConvertTo-SecureString function, it will create a System.Security.SecureString object (whatever that is, I couldn't properly inspect it…), which can be passed along as a credential to log in to Windows computers.

Now this works just fine if you run all those commands on the server you want to work with. The problems start later, when you re-provision that server (and of course you have saved that $StandardString, since the user hasn't changed credentials and you need it to log them in). And if you hadn't paid attention to the last clause of the description, the one about what happens when no key is specified:

Surprise!

A quick Google search for Windows Data Protection (DPAPI) and you will see it's nothing more than a key storage engine that saves a bunch of keys for the user. So when you call the function without the -Key argument, a key coming from DPAPI is used instead, one that is tied to that particular user and machine. And, of course, the error you get back when you call the reverse function isn't that descriptive either:

ConvertTo-SecureString : Input string was not in a correct format.

Was it too hard to give a message like "key is invalid" or "decryption failed"? Especially since they are using the hidden Windows key by default?
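To make the difference concrete, here is a minimal sketch of the same round trip with an explicit -Key, so the encrypted string no longer depends on DPAPI and can be decrypted on a re-provisioned server. The key bytes and the DOMAIN\user_name account are placeholders I made up for illustration; in a real setup the key would have to come from some secret store, and that is an assumption about your environment, not something PowerShell does for you.

```powershell
# Hypothetical 32-byte AES key (placeholder values, never hard-code a real key).
# -Key accepts a byte array of 16, 24 or 32 bytes.
$key = [byte[]](1..32)

# Build a SecureString from plain text, for illustration only.
$secure = ConvertTo-SecureString 'powershellrocks?' -AsPlainText -Force

# Encrypt with the explicit key instead of the implicit DPAPI one.
$encrypted = ConvertFrom-SecureString -SecureString $secure -Key $key

# Later, possibly on another machine: decrypt with the same key.
$restored = ConvertTo-SecureString -String $encrypted -Key $key

# Build the credential (the account name is a placeholder).
$credential = New-Object System.Management.Automation.PSCredential 'DOMAIN\user_name', $restored

# One way to inspect what a SecureString actually holds.
$plain = $credential.GetNetworkCredential().Password
```

With the key passed explicitly on both sides, the saved string only depends on something you control, which is exactly the part the default behaviour hides from you.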

Unfortunately this goes across all PS

The guys who originally wrote PowerShell didn't want to adhere to "explicit is better than implicit", a principle used quite often in software development (see this). Being primarily a Linux user, I always loved the tools that MS was providing to Windows users. And frankly, this was amazing in the past. But unfortunately, as time goes by, I am realising that the decisions they had to take while implementing those tools weren't as objective as those behind the respective open source ones.

And even when the open source guys didn't do such a good job and ended up creating tools that weren't useful, those tools became deprecated quite fast. This cycle didn't happen with Microsoft. A product had to go live, and whether it covered the needs of its users was in fact irrelevant to whether it went live or not… (sounds familiar?)