Kubernetes as a Panacea: Myth or Reality?

In the rapidly evolving world of technology, few tools have garnered as much attention as Kubernetes. Often hailed as a silver bullet for a multitude of IT challenges, Kubernetes is touted as a panacea for managing containerized applications. But is this perception grounded in reality, or is it merely a myth? In this blog post, we will delve into the capabilities and limitations of Kubernetes, exploring whether it truly lives up to the hype.

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source platform designed to automate the deployment, scaling, and operation of application containers across clusters of hosts. Originally developed by Google, it is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides a framework to run distributed systems resiliently, handling scaling and failover for applications, and providing deployment patterns and management tools.

The Promises of Kubernetes

- Scalability: One of the most significant promises of Kubernetes is its ability to scale applications with little manual intervention. Through mechanisms such as the Horizontal Pod Autoscaler, it can automatically adjust the number of running pods based on the observed load, helping applications remain responsive and efficient under varying demands.

- Resilience: Kubernetes offers robust mechanisms for managing the lifecycle of applications. It ensures that your applications are always running in the desired state, automatically restarting containers that fail or are unresponsive.

- Portability: Kubernetes abstracts away the underlying infrastructure, making it possible to run your applications on any cloud provider or on-premises environment. This portability is a key advantage for businesses looking to avoid vendor lock-in.

- Efficiency: By optimizing the use of resources through its scheduling capabilities, Kubernetes can lead to more efficient utilization of hardware, reducing costs and improving performance.

- Automation: Kubernetes automates many aspects of application management, including deployment, scaling, and operations, freeing up developers and IT staff to focus on more strategic tasks.
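To make the scalability promise a little more concrete, here is a minimal sketch of the ratio rule that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name and the sample numbers are illustrative assumptions, and the sketch deliberately ignores min/max replica bounds, stabilization windows, and pod readiness, all of which the real controller takes into account.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the documented HPA ratio rule.

    `tolerance` models the band around 1.0 in which no scaling happens
    (assumed here to be 10%, the documented default).
    """
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Otherwise scale proportionally and round up.
    return math.ceil(current_replicas * ratio)


print(desired_replicas(4, 200.0, 100.0))  # load is double the target
print(desired_replicas(4, 50.0, 100.0))   # load is half the target
print(desired_replicas(4, 105.0, 100.0))  # within tolerance, unchanged
```

Note that the rule only decides how many pods to run. It says nothing about whether whatever those pods depend on, such as a database, can keep up.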

The Reality Check

While the promises of Kubernetes are compelling, it’s essential to recognize that it is not a magic solution that will solve all problems effortlessly. There are several considerations and challenges that organizations must be aware of:

- Complexity: Kubernetes has a steep learning curve. Its powerful features come with a complexity that can be overwhelming for teams new to container orchestration. Proper training and expertise are required to harness its full potential.

- Resource Intensive: Running a Kubernetes cluster can be resource-intensive. The control plane components and various add-ons needed for a production environment can consume significant CPU and memory, which may not be ideal for small-scale applications.

- Operational Overhead: Despite its automation capabilities, Kubernetes still requires significant operational oversight. Maintaining, updating, and securing a Kubernetes cluster involves ongoing effort and vigilance.

- Security: Kubernetes security is complex and multi-faceted. Misconfigurations can lead to vulnerabilities, and securing a cluster requires a thorough understanding of Kubernetes security best practices and continuous monitoring.

- Integration and Compatibility: Integrating Kubernetes with existing systems and workflows can be challenging. Not all applications are designed to run in a containerized environment, and some may require substantial refactoring to be compatible with Kubernetes.

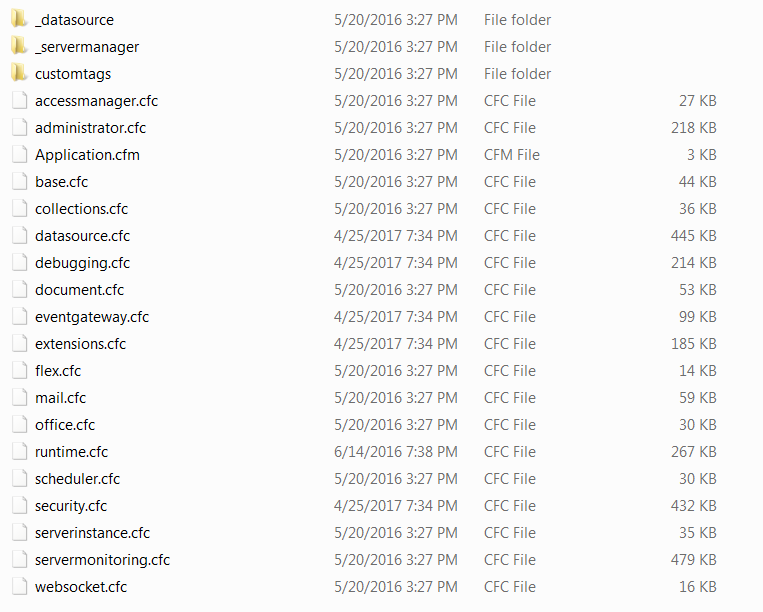

- Database Migrations: One of the notable limitations of Kubernetes is its inability to manage complex database migrations effectively. While Kubernetes excels at managing stateless applications, handling stateful components like databases and their intricate migration processes can be cumbersome. Database migrations often require precise sequencing, careful coordination, and rollback mechanisms that go beyond the orchestration capabilities of Kubernetes. These tasks often necessitate external tools and manual oversight, underscoring that Kubernetes is not a comprehensive solution for every aspect of application deployment and maintenance.

Use Istio

I recently sat in on a candidate interview in which the candidate was asked to “provide a system solution that would solve the most common issue found in a live-update system: uptime”. The requirements were simple. You have a system that is split into two parts:

- Backend.

- Database.

Using k8s, what would be the ideal setup so that you can update your backend code while also running a database migration?

For example, say the backend code is compatible with the database schema at major version X. What happens when you upgrade to major version Y and all your users must be served with zero (literally zero) downtime?

After thinking for a bit, the candidate responded that you need to deploy a backwards-compatible version in between, so that the migration has a chance to take place.
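The candidate's suggestion corresponds to what is often called an expand/contract (or parallel change) migration. The sketch below is one possible illustration of it, not the setup discussed in the interview: it uses Python's built-in `sqlite3` module as a stand-in database, and the `users` table, the `name` and `display_name` columns, and the helper functions are all hypothetical.

```python
import sqlite3


def old_read(conn):
    """Backend version X: only knows the `name` column."""
    return [r[0] for r in conn.execute("SELECT name FROM users ORDER BY id")]


def new_read(conn):
    """Backend version Y: only knows the `display_name` column."""
    return [r[0] for r in
            conn.execute("SELECT display_name FROM users ORDER BY id")]


def compat_write(conn, value):
    """Intermediate, backwards-compatible version: writes both columns."""
    conn.execute(
        "INSERT INTO users (name, display_name) VALUES (?, ?)",
        (value, value),
    )


def run_expand_contract():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO users (name) VALUES ('alice')")  # version X data

    # Expand: add the new column without touching the old one.
    conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")
    # The intermediate version keeps both columns in sync for new rows.
    compat_write(conn, "bob")
    # Backfill rows written before the expand step.
    conn.execute(
        "UPDATE users SET display_name = name WHERE display_name IS NULL"
    )
    # Both the old and the new reader now see the same data, so version Y
    # can be rolled out; dropping `name` (contract) comes only after that.
    return old_read(conn), new_read(conn)


print(run_expand_contract())
```

The point of the intermediate version is that at no moment does a running backend depend on a column that does not exist yet or no longer exists.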

According to the interviewer, this was of course unacceptable. The interviewer’s answer was: use Istio.

So what does this Istio thing do?

- Traffic Management: Imagine you have a bunch of tiny services (like small programs) that need to talk to each other to make a larger application work, much like how team members need to communicate to complete a project. Istio acts as the “traffic controller,” making sure these messages go to the right place, in the right order, and without getting lost. It can also control how much traffic goes to each service, balance the load, and handle failures gracefully.

- Security: Istio makes sure that communication between services is secure. It’s like locking all the doors and windows in a building to ensure that only authorized people can enter. Istio automatically encrypts the data being sent between services and checks that each service is who it claims to be (authentication). It also enforces rules about who can talk to whom (authorization).

- Observability: Istio helps you keep an eye on what’s happening inside your application. It’s like having a surveillance system that monitors traffic, performance, errors, and other important metrics. Istio provides insights into how services are interacting, which can help you quickly identify and fix issues.

- Policy Enforcement: It ensures that certain rules are followed. For example, it can enforce limits on how much data one service can send to another or ensure that certain services are only accessible under specific conditions. It’s like having company policies to ensure everything runs smoothly and safely.
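As a rough illustration of the "control how much traffic goes to each service" idea from the traffic management bullet, here is a conceptual sketch of a weighted traffic split. It is plain Python and not Istio's API: in Istio a split like this is configured declaratively through weighted routes rather than written by hand. The backend names and the 90/10 weights are made up for the example.

```python
import random
from collections import Counter


def pick_backend(weights: dict[str, int], rng: random.Random) -> str:
    """Choose one backend, with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights: dict[str, int],
             requests: int = 10_000,
             seed: int = 42) -> Counter:
    """Route `requests` simulated requests and count where they landed."""
    rng = random.Random(seed)  # seeded so repeated runs give the same split
    return Counter(pick_backend(weights, rng) for _ in range(requests))


# Hypothetical canary rollout: roughly 10% of requests go to the new version.
counts = simulate({"backend-v1": 90, "backend-v2": 10})
print(counts)
```

A split like this decides which version of the backend handles a request. It has no say over what happens once that request reaches a shared database.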

OK, so my guess is that the interviewer had read the bullet points above and thought:

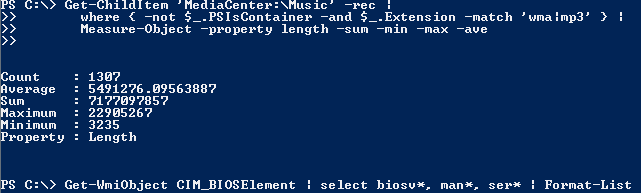

Hmmm, it looks like smart traffic management can be used to route traffic away from the database while a migration is running, so that the system never stops serving requests at all!

Well, that would be correct, except that the database is the central place where all application traffic converges, and it relies on a central lock (a semaphore, if you like) to coordinate write operations. The job of that lock is to decide which agent may write and which may not. That means that when you run your migration and change the columns of a table, the RDBMS typically needs exclusive access to that table in order to alter or recreate it, and no one else is allowed to write to it in the meantime. No matter how cleverly you route the pod logic and traffic, the bottleneck in the end is still the database.
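The locking behaviour described above can be observed on a small scale. The following is a minimal sketch using Python's built-in `sqlite3` module as a stand-in, under some loud assumptions: SQLite locks the whole database file rather than a single table, and server databases differ in which schema changes need which locks. The table name, column names, and the `demo_migration_lock` function are hypothetical.

```python
import os
import sqlite3
import tempfile


def demo_migration_lock():
    """Return (write_blocked_during_migration, write_ok_after_commit)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "app.db")
        # isolation_level=None: we issue BEGIN/COMMIT ourselves.
        # timeout=0: fail immediately instead of waiting for the lock.
        migrator = sqlite3.connect(path, timeout=0, isolation_level=None)
        backend = sqlite3.connect(path, timeout=0, isolation_level=None)
        migrator.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)"
        )

        # The migration takes an exclusive lock and changes the schema.
        migrator.execute("BEGIN EXCLUSIVE")
        migrator.execute("ALTER TABLE users ADD COLUMN email TEXT")

        # Meanwhile a backend pod tries to write. However the request was
        # routed, it ends up at the same locked database.
        blocked = False
        try:
            backend.execute("INSERT INTO users (name) VALUES ('alice')")
        except sqlite3.OperationalError:
            blocked = True

        # Once the migration commits, the same write goes through.
        migrator.execute("COMMIT")
        backend.execute("INSERT INTO users (name) VALUES ('alice')")
        rows = backend.execute("SELECT COUNT(*) FROM users").fetchone()[0]

        migrator.close()
        backend.close()
        return blocked, rows == 1


print(demo_migration_lock())
```

Neither connection knows or cares how traffic reached it. The write is refused because of the lock held inside the database, which is exactly the part that a service mesh does not control.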

Kubernetes: Panacea or Powerful Tool?

Kubernetes is undoubtedly a powerful tool that can transform how organizations deploy and manage applications. It offers unparalleled capabilities for scalability, resilience, and efficiency. However, it’s not a one-size-fits-all solution. The notion of Kubernetes as a panacea is a myth if taken to mean that it can solve all IT problems without effort or expertise.

The reality is that Kubernetes can provide immense benefits when used appropriately and with the right level of investment in knowledge and resources. Organizations should approach Kubernetes with a clear understanding of its strengths and limitations, ensuring they have the necessary skills and infrastructure in place to leverage its full potential.

In conclusion, Kubernetes is not a magical cure-all, but it is a powerful tool that, when used correctly, can significantly enhance the agility, reliability, and scalability of modern applications. By setting realistic expectations and preparing adequately, organizations can avoid the pitfalls and maximize the advantages of adopting Kubernetes.