As a Computer Science master’s graduate, I was also taught courses on Artificial Intelligence. Back then, though, those were pretty basic. In ’08, everything described as AI was merely a collection of algorithms designed to solve or calculate a specific set of problems.

The most common problem I can remember from back then was writing an algorithm that solves the 8 queens problem, or an algorithm for a variation of the well-known Tower of Hanoi puzzle.
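To give a flavour of that kind of exercise, here is a minimal backtracking sketch for the queens puzzle. It is my own illustration rather than the coursework itself, and the function name `solve_n_queens` is made up for this post.

```python
def solve_n_queens(n=8):
    """Return every placement of n non-attacking queens.

    Each solution is a tuple where index = row and value = column.
    """
    solutions = []
    cols = []  # cols[r] is the column of the queen already placed in row r

    def safe(row, col):
        # A new queen at (row, col) must not share a column or a diagonal
        # with any queen placed in the rows above it.
        for r, c in enumerate(cols):
            if c == col or abs(c - col) == row - r:
                return False
        return True

    def place(row):
        if row == n:
            solutions.append(tuple(cols))
            return
        for col in range(n):
            if safe(row, col):
                cols.append(col)
                place(row + 1)
                cols.pop()  # backtrack and try the next column

    place(0)
    return solutions


if __name__ == "__main__":
    print(len(solve_n_queens(8)))  # 92 placements on a standard 8x8 board
```

Nothing statistical is going on here: it is plain search with pruning, which is exactly the kind of "AI" we were taught.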

The theory behind AI was fairly simple: Algorithms and Data Structures, and it actually stopped there! No statistics, nothing fancy from a mathematical point of view. I can still remember BFS and DFS, but those were taught thoroughly in a separate course called “Algorithms and Data Structures”.

Another topic taught in that course was how to use A* on a graph or a tree in order to reduce the possible branches in a potential “Artificial Intelligence” solution employed in an application. But frankly, the tools needed today to create a serious AI application weren’t the subject of that course.
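As a refresher on how A* cuts down the branches it explores, here is a small sketch on a grid. The grid encoding (0 = free, 1 = wall), the function name `a_star` and the Manhattan-distance heuristic are my own assumptions for illustration, not material from the course.

```python
import heapq


def a_star(grid, start, goal):
    """Length of the shortest 4-connected path from start to goal.

    grid is a list of rows; 0 means free, 1 means wall.
    Returns None when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates on a unit-cost grid,
        # so the first time the goal is popped its cost is optimal.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    best_g = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            return g
        if g > best_g.get(cell, float("inf")):
            continue  # stale entry: a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    heapq.heappush(frontier, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


if __name__ == "__main__":
    demo = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
        [0, 1, 1, 0],
    ]
    print(a_star(demo, (0, 0), (3, 3)))  # 6 steps through the gap in row 1
```

The heuristic is what does the pruning: cells that look far from the goal sink to the bottom of the priority queue and are often never expanded at all.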

“Pattern Recognition” was the course most closely related to today’s AI applications. It was a more “control theory” oriented course, the grandfather of today’s pattern recognition. Regression and discrete mathematics were its basic subjects. Not probability theory. Not even statistics. Now, 15 years later, things have changed radically.

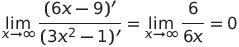

Last night, I decided to ask ChatGPT a very basic calculus question, the kind that 17-year-old students are taught. The subject was limits: given some mathematical function, calculate the limit of that function as its argument approaches infinity.

A trip to Infinity

A small parenthesis here. I have watched the Netflix documentary “A Trip to Infinity”, which was amazing! The stories in that documentary were very well presented. The “Infinite Hotel”, with infinitely many rooms, which always has a room available, and the manager who visits all the rooms in just 1 minute. The Japanese Godzilla story was also very good. Later, physics gets involved too, and that abstract mathematical concept called infinity is applied to our real-world universe. Definitely recommended, and I have to say I was very impressed with the work they did!

However, this is something that unfortunately cannot be easily represented in the limited space of an LLM AI application such as ChatGPT. I think that, in general, calculus quizzes cannot be answered that well by such a model. The reason is that it would require a huge amount of data (one could argue infinite?) to actually grasp a concept as big as infinity. Even in the very first lessons of my master’s, we were taught that a computing machine cannot grasp the concept of infinity.
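To make that last point concrete, here is a small Python sketch of how a machine "handles" infinity in practice: as a special floating-point marker, with indeterminate forms collapsing to "not a number". The helper `safe_divide` is a hypothetical name I made up for this example.

```python
import math


def safe_divide(a, b):
    """Return a / b, or None when Python refuses to divide (b == 0)."""
    try:
        return a / b
    except ZeroDivisionError:
        return None


# math.inf is the IEEE 754 "infinity" marker: a special float value,
# not a number the machine can actually count up to.
print(math.inf + 1 == math.inf)         # infinity absorbs finite additions
print(safe_divide(1, 0))                # division by zero is rejected
print(math.inf - math.inf)              # indeterminate form, gives nan
print(safe_divide(math.inf, math.inf))  # another indeterminate form, nan
```

The machine does not reason about the limit at all. It either raises an error or hands back a placeholder, and it is up to the programmer (or the mathematician) to work out what the expression should really mean.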

Just as something as simple as a division by zero will raise an exception or make a program crash (I’ll write a blog post sometime about why, once in a blue moon, this caused a few hundred thousand dollars in losses in PHP), ChatGPT also crashed when I repeatedly asked it to calculate the simple limit:

This is what it replied with:

When I “devised” the above quiz, I had in mind this:

The above rule merely says the following:

If, after calculating the limits of the numerator and the denominator separately, you arrive at a paradox like:

or:

and if we assume that both the numerator and the denominator are continuous and differentiable functions, then the above limit gets transformed to:
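For readers who want it in symbols, here is a sketch of the rule as it is usually stated, assuming it is L'Hôpital's rule that is meant. The names f, g and a are generic placeholders (a may also be ±∞), and the worked example at the end is only an illustration, not the function from my quiz.

```latex
% Precondition: the quotient is an indeterminate form
\lim_{x \to a} f(x) = \lim_{x \to a} g(x) = 0
\quad\text{or}\quad
\lim_{x \to a} |f(x)| = \lim_{x \to a} |g(x)| = \infty

% Conclusion, provided g'(x) \neq 0 near a and the right-hand limit exists
\lim_{x \to a} \frac{f(x)}{g(x)} = \lim_{x \to a} \frac{f'(x)}{g'(x)}

% Illustrative example of the \infty / \infty case
\lim_{x \to \infty} \frac{\ln x}{x}
  = \lim_{x \to \infty} \frac{1/x}{1}
  = 0
```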

Interesting…

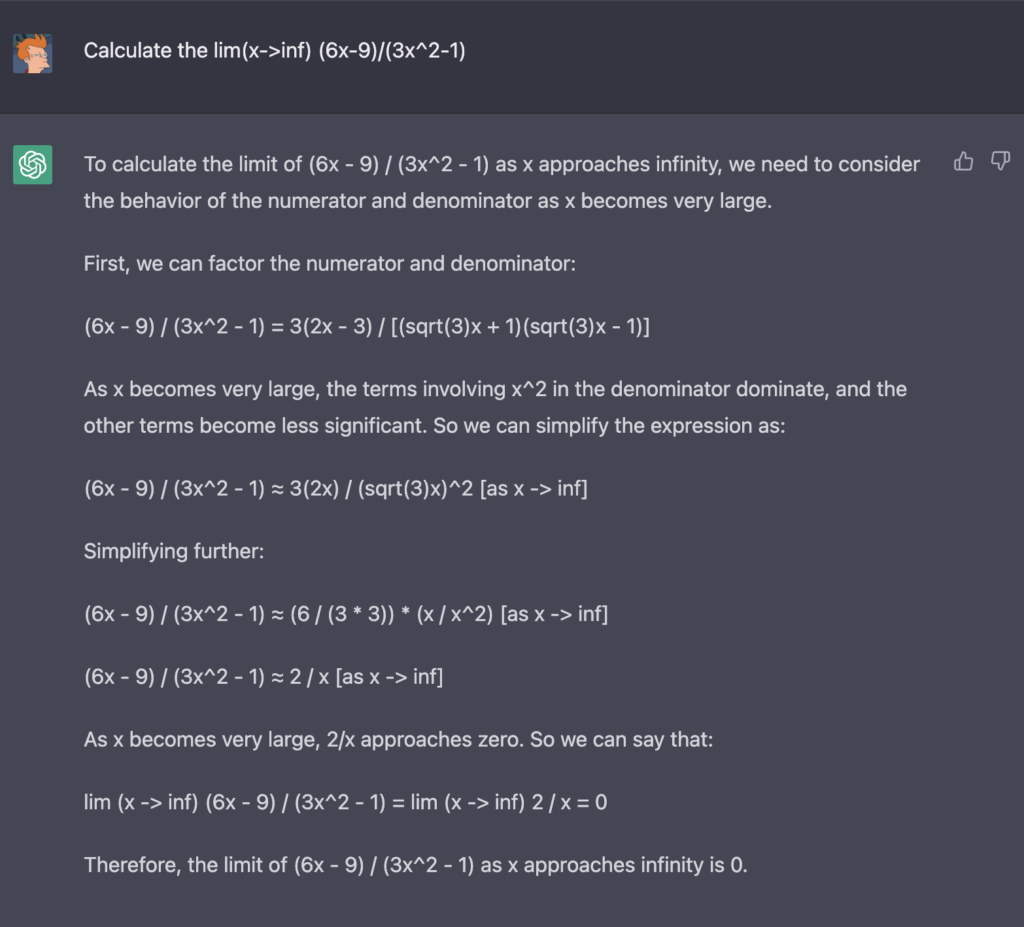

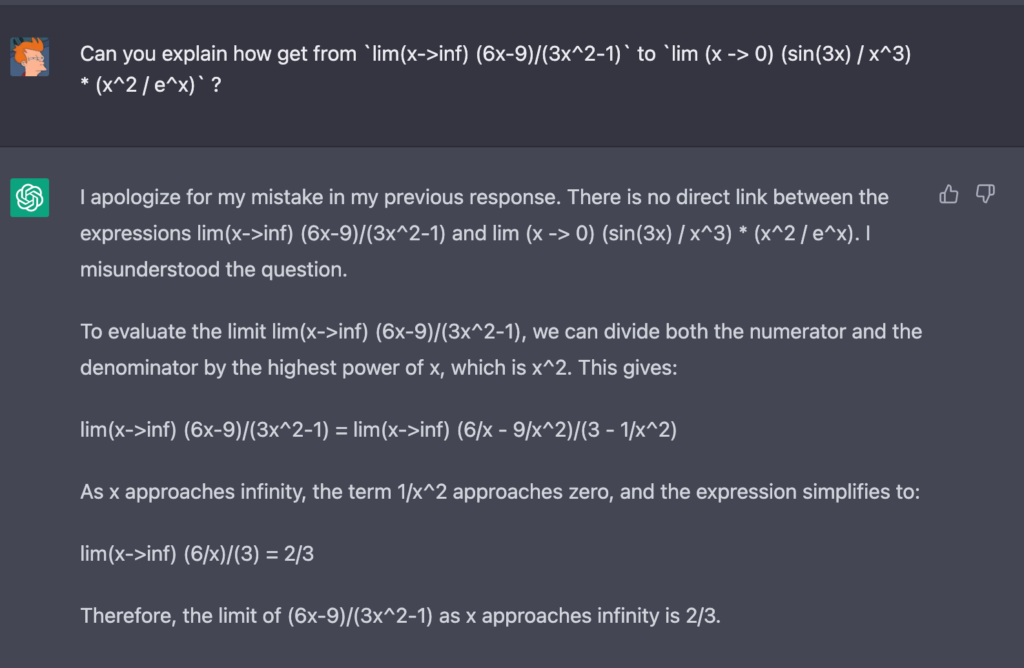

So, having seen the response, I quizzically asked:

And there you have it. ChatGPT completely flunked the response. The function in the limit calculation changed radically into a sinusoidal function (who knows why? Did some neuron have different weights, and that was the first thing that connected with the question?).

Luckily the result was the same, and still correct, at 0.

I decided to give it another chance:

Again, even though it acknowledged the defect, it made things even worse! Now it calculated the limit as 2/3, which is completely wrong.

That’s where I decided to stop playing with ChatGPT and Basic Calculus. I believe it is actually very well written, but when it comes to abstract thinking, we have a long, long way to go…

Conclusion

If you are a calculus student and you want to cheat on your homework with ChatGPT, do think twice. You might get a correct result (after all, there are a few things we can’t calculate with computational methods), but the proof of that answer would be mathematically incorrect!